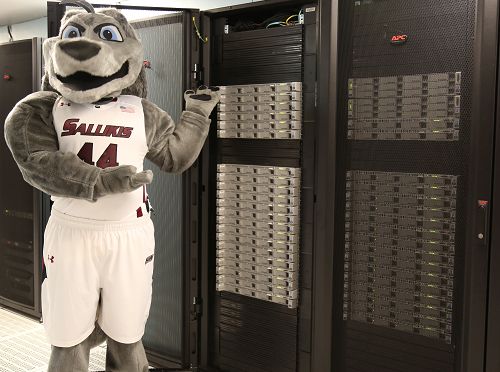

High-Performance Computing (HPC) Facilities

Equipment Available (In-house and Off-Campus Access)

(1) Southern Illinois High-Performance Computing Research Infrastructure (SIHPCI): a Dell Linux cluster (106 nodes, dual-CPU quad-core Intel Xeon 2.3 GHz, 8 GB RAM, and 90 TB storage) dubbed Maxwell. It was awarded by the NSF Computing Research Infrastructure (CRI) Division in 2009.

Here is a link to the Tutorial on How to Use the Cluster.

Requesting an Account on Maxwell: Send an email to Professor Shaikh Ahmed (ahmed@siu.edu) with the following information:

- Your name
- Affiliation
- IP address of the computer(s) on the SIUC network from which you would access Maxwell
- A short paragraph on the research you will perform and the software tools to be used

You will receive an email with login information. Host: maxwell.ecehpc.siuc.edu

Please read through the tutorial (link given above) on how to use the machine.

VERY IMPORTANT: PLEASE NOTE THAT ... (3) It is the user's responsibility to learn how to use the cluster properly. Users should always transfer and back up their data and programs routinely to a local (PC/Mac/Linux) drive.
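The routine backup recommended above can be sketched as a small Python helper that assembles an `rsync`-over-SSH command for pulling a directory from Maxwell to a local drive. This is only an illustration under stated assumptions: the page does not say which transfer tools are available on the cluster, so `rsync` and SSH access are assumed, and the user name `jdoe` and both directory names are hypothetical placeholders. Only the host name comes from this page.

```python
import shlex

# Host name as given on this page; everything else below is a placeholder.
MAXWELL_HOST = "maxwell.ecehpc.siuc.edu"


def build_backup_command(user, remote_dir, local_dir, host=MAXWELL_HOST):
    """Return an rsync argument list that copies remote_dir to local_dir.

    Assumes rsync is installed on both machines and that the account can
    log in over SSH (neither is confirmed by the source page).
    """
    # A trailing slash tells rsync to copy the directory's contents
    # rather than creating an extra nested directory locally.
    remote = f"{user}@{host}:{remote_dir.rstrip('/')}/"
    # -a: archive mode (recursive, keeps timestamps and permissions)
    # -v: verbose, -z: compress during transfer, -e ssh: transport over SSH
    return ["rsync", "-avz", "-e", "ssh", remote, local_dir]


# Hypothetical user and paths, for illustration only.
command = build_backup_command("jdoe", "projects", "maxwell_backup")
print(shlex.join(command))
```

The helper only builds and prints the command; it does not run it. Once the account details are known, the same argument list could be passed to `subprocess.run(command, check=True)` or scheduled locally so that backups happen routinely.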

For System Administrators: Here is the link to the SIHPCI webpage (accessible only from within the SIUC network; from outside, use VPN). Here is the real-time Ganglia report for Maxwell cluster usage... (accessible only from within the SIUC network; from outside, use VPN).

The following links are for administrative purposes:

Platform Computing: http://maxwell.ecehpc.siuc.edu:8080/platform/

Documentation: http://maxwell.ecehpc.siuc.edu/cgi-bin/portal.cgi

(2) SIU Office of Information Technology: The Office of Information Technology (OIT) is the primary provider of IT resources and support for Southern Illinois University Carbondale (SIUC). The Network Engineering division under the OIT is responsible for managing and maintaining the network infrastructure for the Campus Area Network, which includes both wired and wireless networks. The team offers various services that support both network usage and related research across the campus, such as Network Infrastructure, Wireless Connection, QoS & VoIP, VPN, and IP/Subnet Request. The proposed research will utilize the campus network and some of these network services to support the services and applications it develops. The OIT at SIUC can effectively support the research project by coordinating relevant network services on campus. The OIT also operates an HPC system based on the Cisco Unified Computing System (UCS) solution, which includes 40 C-Series nodes with Intel Xeon E5 processors, 64 GB RAM per node, 20 cores per node, and 46 TB of disk space (http://oit.siu.edu/rcc/bigdog/).

(3) In-house 25-node Intel Xeon cluster (dual-CPU quad-core 2.3 GHz, 16 GB RAM) dubbed octopus. All 200 cores are dedicated to nanostructure modeling simulations. Here are the complete cluster specifications.

(4) Access to the NSF's nanoHUB.org computational workspace.

(5) Access to the NSF's XSEDE computational workspaces.

(6) Access to the Oak Ridge National Lab's HPC machines. Here is the Information page for Jaguar@ORNL. Here is the Jaguar User Guide. ORNL Science Links: materials@ORNL, DoE@ORNL, materials for harsh environments.

(7) Access to in-house Synopsys and Cadence design tools.

(8) A large, recently renovated research laboratory of about 1100 sq. ft. in the Engineering building at SIU. The lab has office space and setups (PCs, printers, etc.) for at least 10 personnel.
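As a quick sanity check on the cluster figures listed in items (1) to (3), the short Python sketch below works out total core counts from the node specifications. It assumes that "dual CPU quad-core" means two quad-core processors per node (8 cores per node), which matches the 200 cores stated for octopus. The totals for Maxwell and the OIT system are derived here under that assumption and are not stated on this page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Node count and per-node core count for one cluster."""
    name: str
    nodes: int
    cores_per_node: int

    @property
    def total_cores(self) -> int:
        # Total cores = number of nodes x cores in each node.
        return self.nodes * self.cores_per_node


# Assumption: dual-CPU quad-core nodes have 2 * 4 = 8 cores each.
clusters = [
    Cluster("Maxwell", nodes=106, cores_per_node=2 * 4),
    Cluster("OIT UCS system", nodes=40, cores_per_node=20),
    Cluster("octopus", nodes=25, cores_per_node=2 * 4),
]

for cluster in clusters:
    print(f"{cluster.name}: {cluster.total_cores} cores")
```

For octopus this gives 25 x 8 = 200 cores, in agreement with the figure quoted in item (3).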

Last Updated: May 01, 2017. Copyright © 2015 Department of Electrical and Computer Engineering: 1230 Lincoln Drive MC 6603, Carbondale, IL 62901. All rights reserved.